We need an environment

The World should be easy to interact with

Today I just started something simple: An Object to represent a simulated World in which an Agent can move.

As the “Agent” will need to interact with the World, and the World should return rewards, and understand positions, etc., I decided in this case to go the way of R Objects, more specifically “setRefClass”.

For today, I just created a basic object to generate a “random world” that can have walls, food, even enemy(ies) and in which we can throw an agent at random in any empty space.

EDIT: Added Agent movements and visualizations

A few peculiarities maybe: I think it will be simpler in the future to not have to deal with “borders” from the Agent perspective, and so I set my Worlds to necessarily be walls all around.

A random policy agent in a random world

Let’s start today with a simple video showing the results. The agent is the blue thing. Food is green, enemy is red, black are walls.

All is initialized randomly, and so are the moves of the current agent. On the left, I show the agent’s perspective/perception (allocentric) of the World. On the right, the World as it is at each step:

Videos are always more satisfying than matrices, but to understand what happens, let’s have a look under the hood…

Today’s results in Matrix Format

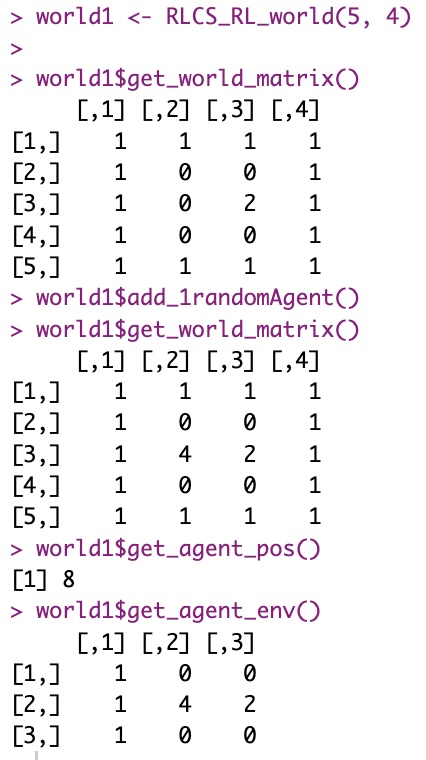

So in my matrix, I choose 0 = empty cell, 1 is a wall, 2 is food, 3 is an enemy (defaults to no enemies). And 4 will be an agent. This is all arbitrary, but when in the future I encode into binary there will be a specific order, as this will be relevant for the GA to work with.

Anyhow, it looks like so:

In this “world”, I needed to add methods to move around (from-to positions, or just “to”), control for ilegal moves (can’t move into a wall), return rewards: good +10 for food, bad -100 if enemy, and empty and walls, well, to be refined.

All very simple, but cool at the same time: I can already render “animations” of said worlds… Albeit with a very stupid agent for now…

What next?

Well, I’m afraid now I have most of the pieces. But still:

I will need to implement a variation of my existing RLCS code to account for four possible actions (for now, actions are binary, that’s not enough).

I will need to add a sensible binary encoding for the agent to interpret its visible environment

I will need to account for rewards, change the design for the RL case where I need to add prediction and an Action Set

…

Quite a bit of work still. But all fun.

TO BE CONTINUED…