I’m not sure yet… If I can do it

Although I know in theory…

At least the XCS variant of the LCS algorithm is a “well-known” (in the rather small sub-world of LCS enthusiasts, that is) approach to Reinforcement Learning.

Then again, all the step-by-step descriptions of RL in LCS seem to be hidden in more or less arcane papers which are pay-per-access, and I don’t know yet if things make enough sense for me to pay for reading said papers. (Until recently, I had access with my University’s MSc Student account… No more, it would seem. Fair enough.) We’ll see how I go about that later…

But for now…

General understanding of RL and LCS: My own mix

Well, what I can do is try to come up with my own personal version of an RL implementation with my RLCS “future-package” and see if I can make it work, without worrying about past work too much for now.

I do have a general understanding of the ideas of LCS and RL, I just haven’t found a detailed step-by-step for me to copy from pseudo-code. I have also been reading (but I’m far from done) what I understand is the bible of Reinforcement Learning, i.e. “Reinforcement Learning: An Introduction” by R. S. Sutton & A. G. Barto. The general ideas of TD and Q-Learning I think I somewhat grasp, and I have my own (limited, sure) experience with Monte-Carlo.
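For reference, here is a minimal sketch of the tabular Q-Learning update as it is usually presented, not anything specific to LCS or to my package. The function name `q_learning_update`, the grid-like states and the values of `alpha` and `gamma` are all assumptions made up for illustration.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """One tabular Q-Learning step.

    Moves Q(s, a) toward the TD target r + gamma * max_a' Q(s', a').
    alpha (learning rate) and gamma (discount) are illustrative values.
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    td_error = td_target - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return td_error


# Hypothetical usage: unseen (state, action) pairs default to 0.0.
q = defaultdict(float)
actions = ["north", "south", "west", "east"]
err = q_learning_update(q, state=(0, 0), action="east", reward=1.0,
                        next_state=(0, 1), actions=actions)
```

The TD error is the quantity that an RL-flavoured LCS would presumably use to adjust the predictions of the rules that proposed the action, but that link is my reading, not something taken from a reference implementation.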

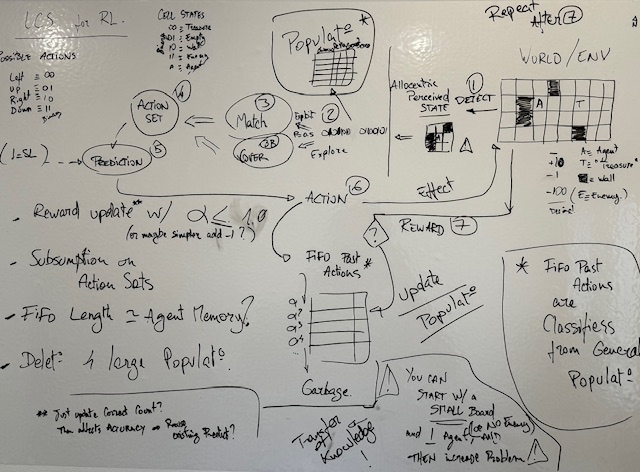

Mixing these concepts with what I have implemented thus far of an LCS, adapting my code with concepts of Action Sets instead of Correct Sets, detect/action/effect/reward with an environment, and a split between exploit and explore, I came up with this “design” this morning (Saturday and Sunday mornings are often a bit quieter than the rest of the week):

This is all on my small home-office wall right now, not at all implemented, but it seems it should be feasible, and I am curious as to whether it could work (or be a complete bust).
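To make the Action Set and explore/exploit ideas a bit more concrete, here is a rough sketch under several assumptions: rules use ternary conditions over `0`, `1` and `#` (wildcard), each rule carries a single payoff `prediction`, and exploitation picks the action with the highest plain average prediction (XCS itself weights by fitness, which is left out here). The names `Classifier`, `match_set`, `choose_action` and `action_set` are hypothetical, and covering for an empty match set is not handled.

```python
import random
from dataclasses import dataclass


@dataclass
class Classifier:
    condition: str            # ternary string; '#' matches either bit
    action: str
    prediction: float = 0.0   # estimated payoff when this rule's action is taken


def matches(condition, state):
    """True if every non-wildcard symbol equals the matching state bit."""
    return len(condition) == len(state) and all(
        c == "#" or c == s for c, s in zip(condition, state)
    )


def match_set(population, state):
    """All rules whose condition matches the detected state."""
    return [cl for cl in population if matches(cl.condition, state)]


def choose_action(mset, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best average prediction."""
    actions = sorted({cl.action for cl in mset})
    if rng.random() < epsilon:
        return rng.choice(actions)

    def mean_prediction(action):
        preds = [cl.prediction for cl in mset if cl.action == action]
        return sum(preds) / len(preds)

    return max(actions, key=mean_prediction)


def action_set(mset, action):
    """Rules in the match set that advocate the chosen action."""
    return [cl for cl in mset if cl.action == action]


# Hypothetical population and state, purely for illustration.
population = [
    Classifier("1#0", "north", 5.0),
    Classifier("##0", "south", 2.0),
    Classifier("0##", "north", 9.0),
]
mset = match_set(population, "110")
chosen = choose_action(mset, epsilon=0.0, rng=random.Random(0))
aset = action_set(mset, chosen)
```

With `epsilon=0.0` the choice is pure exploitation; the rules in `aset` are the ones that would receive the reward-based update after the environment responds.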

Why even consider RL?

Well, truth be told… I don’t know.

It “sounds” like a cool thing to be able to do. In the above drawing, I suggest I could, using an LCS, implement an “agent” (yes, one of those, but please, I’m not talking about LLMs) that would learn on its own how to navigate a simple grid-world and look for food.

I will have to code the whole logic and rules of that world, all aspects of movements in it, rewards, etc. It’s going to be a bit of work (somehow I think I’ll manage).
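As a first idea of what that world could look like, here is a deliberately tiny sketch. Everything in it is an assumption: the `GridWorld` class, the four move names, walls that simply stop the agent, and a reward of 1.0 for reaching the food and 0.0 otherwise. A real LCS version would probably also need to encode what the agent detects as a bit string, which this sketch skips.

```python
from dataclasses import dataclass

# Row/column offsets for each (hypothetical) action name.
MOVES = {"north": (-1, 0), "south": (1, 0), "west": (0, -1), "east": (0, 1)}


@dataclass
class GridWorld:
    rows: int
    cols: int
    food: tuple
    agent: tuple = (0, 0)

    def detect(self):
        """What the agent perceives; here simply its own (row, col) position."""
        return self.agent

    def step(self, action):
        """Apply a move, clamp it to the grid, and return (state, reward, done)."""
        dr, dc = MOVES[action]
        row = min(max(self.agent[0] + dr, 0), self.rows - 1)
        col = min(max(self.agent[1] + dc, 0), self.cols - 1)
        self.agent = (row, col)
        found = self.agent == self.food
        reward = 1.0 if found else 0.0  # illustrative reward values
        return self.agent, reward, found


# Hypothetical 3x3 world with food in the top-right corner.
world = GridWorld(rows=3, cols=3, food=(0, 2))
bumped = world.step("north")   # already on the top row, so the agent stays put
first = world.step("east")
second = world.step("east")
```

The `detect` / `step` pair mirrors the detect/action/effect/reward loop mentioned earlier: the agent detects a state, acts, and the environment returns the effect and the reward.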

But yeah, there are a few things I don’t know at this point: whether my “design” will work (with whatever adjustments I can come up with), or why I would even program an RL algorithm.

I just think it would be cool to have this future package be able to do all of data mining, supervised learning and reinforcement learning, with examples of each.

As to when I could use it, I know the answer for data mining and SL – not so much for RL, for now.

Conclusions

Just another fun exercise, really. It will take a few weeks, probably.

But if (big if at this stage) I manage to make it work, that would mean I actually understand enough of LCS as a concept to implement it from scratch and do Data Mining, Supervised Learning and Reinforcement Learning. In turn, that would also imply I know a bit more of what Reinforcement Learning entails.

Which in itself – for me – would already be pretty cool.

References

To be fair, there are a few papers out there I was able to access for free. In no particular order, I found these especially valuable as inspiration for my future exercise:

https://arxiv.org/abs/2305.09945